It’s free, functional, and will be woven into almost every device Apple makes.

“Open Photos. Scroll up. Show numbers. 13.”

Over the years, Apple has frequently highlighted its accessibility work in commercials, but the ad that ran for a minute and a half during game 5 of the NBA Finals was particularly powerful. In it, a man in a wheelchair — Ian Mackay, a disability advocate and outdoor enthusiast — issued the commands above to a waiting iMac. With hardly any delay, the computer did as it was told.

Rather than merely saving Mackay a few mouse clicks, the new version of macOS spared him from having to use a switch controlled by his tongue to interact with a machine. That’s the beauty of the update’s Voice Control system: With the right combination of commands, you can control a Mac, iPhone or iPad with the same level of precision as a finger or a mouse cursor. (Just don’t confuse it with Apple’s earlier Voice Control feature, a now-deprecated tool in older versions of iOS that allowed for rudimentary device interactions.)

Even better, there’s no extra software involved — Voice Control is baked directly into Apple’s forthcoming versions of macOS, iOS and iPadOS, and should be functional in the public beta builds the company will release this summer.

Tools like this aren’t uncommon: Windows 10 has its own voice control system, and while it requires more setup than macOS’s approach, it seems to work quite well. We also know that, thanks to its work shrinking machine learning models for voice recognition, Google will release a version of Android that’ll respond to Google Assistant commands near-instantaneously. And more broadly, the rise of smart home gadgetry and virtual assistants has made the idea of talking to machines more palatable. Whether it’s meant to help more people use their products or simply born of a need for simplicity, controlling your devices with your voice is only becoming more prevalent.

That’s great news for people like Ian who live with motor impairments that make the traditional use of computers and smartphones difficult. “Whether you have motor impairments or simply have your hands full, accessibility features like voice commands have for a long time made life easier for all device users,” said Priyanka Ghosh, Director of External Affairs at the National Organization on Disability. “It’s terrific to see Apple stepping up in this area, and as technology continues to remove barriers to social connection and productivity, it should also remove barriers to employment.”

The way Voice Control works is straightforward enough: If you’re on an iOS device, you’ll see a tiny blue microphone light up when the software is listening. (By default, it’s set to listen for commands all the time unless you enable a feature that stops the device from recording when you’re not looking at the screen.) On Macs, a small window will appear to confirm your computer can hear you, and spell out your commands so you can tell whether it understood you correctly.

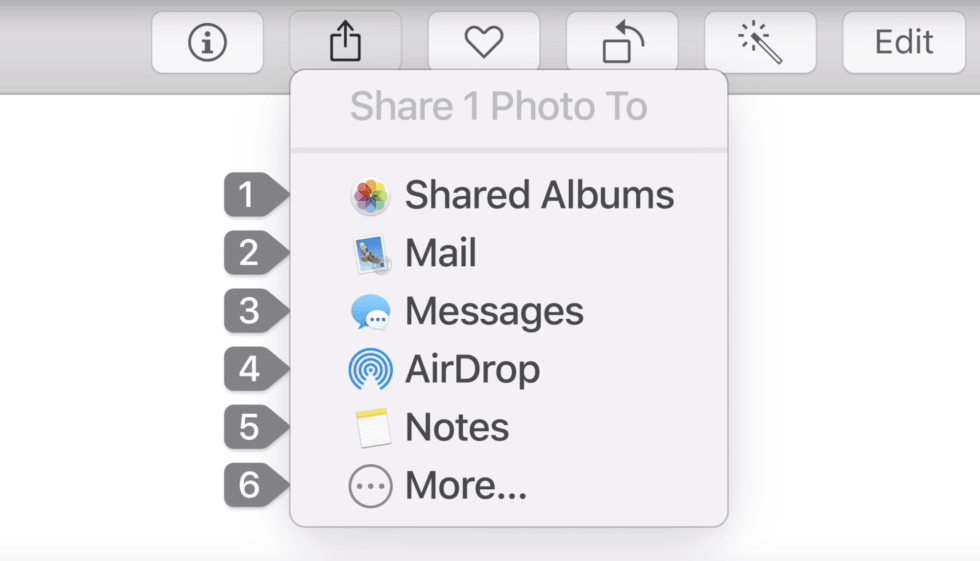

Where Voice Control shines is the sheer granularity of it all. Apple says it’s built on much of the same underlying algorithmic intelligence that powers Siri, so it’s more than adequate for actions like launching apps and transcribing your voice into text. It’s also smart enough to recognize menu items and dialog prompts by name — you can say “tap continue” to accept an app’s terms of service, for instance. Beyond that, though, you can tell Voice Control to “show numbers,” at which point it attaches a number to every single element on-screen you can interact with; from there, you can just say that number to select whatever it was you were looking for.

The controls go deeper still. If, for whatever reason, Voice Control can’t correctly tag an icon or an element on the screen with a number, you can ask it to display a grid instead. Each segment of that grid is tagged with a number you can ask to select; once that’s done, you’ll get another grid that displays an enlarged view of that section of the screen along with more numbers you can ask to interact with. Between Voice Control’s nitty-gritty control options and its underlying understanding of the operating system — mobile or otherwise — it’s running on, virtually nothing can avoid your voice’s reach.
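To make the grid drill-down concrete, here is a minimal sketch of the idea in Python. It is not Apple's implementation: the 3-by-3 grid size, the left-to-right, top-to-bottom numbering, and the names `Rect`, `grid_cell` and `drill_down` are all assumptions made for illustration. It only shows how each spoken number narrows the target region until it is small enough to tap.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rect:
    """A screen region in points (hypothetical type for this sketch)."""
    x: float
    y: float
    width: float
    height: float

    def center(self):
        # The point a tap would land on if this region were selected.
        return (self.x + self.width / 2, self.y + self.height / 2)


def grid_cell(region, number, rows=3, cols=3):
    """Return the cell with the given 1-based number inside `region`.

    Assumption: cells are numbered left to right, top to bottom.
    """
    if not 1 <= number <= rows * cols:
        raise ValueError(f"cell number must be between 1 and {rows * cols}")
    row, col = divmod(number - 1, cols)
    cell_width = region.width / cols
    cell_height = region.height / rows
    return Rect(
        region.x + col * cell_width,
        region.y + row * cell_height,
        cell_width,
        cell_height,
    )


def drill_down(screen, spoken_numbers, rows=3, cols=3):
    """Apply each spoken number in turn, zooming into a smaller region."""
    region = screen
    for number in spoken_numbers:
        region = grid_cell(region, number, rows, cols)
    return region


if __name__ == "__main__":
    screen = Rect(0, 0, 900, 900)
    target = drill_down(screen, [9, 5])  # "nine", then "five"
    print(target, target.center())
```

With a 3-by-3 grid, each spoken number cuts the target area to a ninth of its previous size, so two or three numbers are usually enough to isolate a single button in this model.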

The only real inherent limitation is the time required to get used to Voice Control’s preferred syntax. From what I’ve seen in a guided demo, it handled most casually delivered commands without trouble, but I’d imagine it would take a while to get used to the lengthy strings of commands needed to complete certain tasks. (You can, it should be noted, create Voice Control macros to simplify actions you perform regularly.)

Still, compared to the laborious process of using a modern computing gadget without fine motor control, the depth here seems worth the inevitable mouthful of commands. And remember: This functionality will be available on every device that can run the latest versions of iOS, iPadOS and macOS. The improved quality of life that Voice Control makes possible is only made more potent by its wide reach and its potential for near-immediate utility. Just update your software and you’re all set.

It’ll be months before the feature is officially released as part of Apple’s next round of software releases, but even now, there are some potential caveats worth keeping in mind. When it comes to using Voice Control on a desktop, power isn’t really a concern — the same can’t be said of laptops and iOS devices. (So far, Apple hasn’t said anything specific about Voice Control’s impact on battery life.) Not every app will play nice with Voice Control, either, at least the way they’re laid out now.

Developers who keep accessibility in mind as they craft their software are in a good position — all of their apps’ on-screen elements are probably correctly labeled in their code, which means Voice Control can identify them and make them accessible. Apple doesn’t keep a running list of apps that don’t follow these accessibility best practices, but they’re out there, and trying to use Voice Control could lead to frustration.

While Voice Control technically exists as an accessibility feature, it’s not hard to see it becoming more mainstream in time. In a short demo of the feature, an Apple spokesperson quickly whipped through tasks with minimal hesitation on the software’s part, and I couldn’t help but imagine myself idly directing my computer to respond to tweets with witty rejoinders. And it’s true that some of Apple’s earlier accessibility features have become more widely used, like the on-screen home button that some people use in lieu of the physical one built into older iPhones.

Could Voice Control transcend its niche status and change the way we use our iPhones in the future, perhaps as a part of a more capable Siri? After all, as I mentioned earlier, Google is pushing to make instantaneous voice commands a thing on its own devices thanks to significant improvements to Google Assistant. The answer, for now, is “maybe.”

“I think our main mission of voice control was to make sure that individuals who only have voice as an option to use a device could do so,” Sarah Herrlinger, Apple’s Senior Director of Global Accessibility Policy & Initiatives, told Engadget. “But we want to learn how people use it, and how other individuals might use it and then see how that goes. And it’s one of those things that when you build for the margins, you actually make a better product for the masses.”

Apple seems more than happy to sit back and see how people use Voice Control across all its different devices — if throngs of users without disabilities embrace the feature, that may well mandate a change in Apple’s approach. But even if that never happens, the inclusion of Voice Control across its new software updates remains one of the biggest pro-accessibility moves the company has ever made. Now it just needs to finish iOS 13, iPadOS and macOS Catalina so the people who could benefit from Voice Control can get started with it.